Like many other admins, I ran into a problem caused by changing volumes in a cluster. In my case the trigger was a new storage device. After I moved all virtual machines and other content to the new device, everything worked well. The surprise came when I disconnected the old one: live migrations simply stopped working. Impossible? Well, let’s go step by step.

The origin of the problem is the Veeam backup configuration described in Veeam KB2194: “If you have shared VHDX, you have to define ConfigStoreRootPath parameter…”. After running the PowerShell cmdlet, I was very happy: no warnings in Veeam, backups were running, everything worked perfectly. At this point, I just want to remind you that we are working with PowerShell on a Microsoft cluster and setting a Microsoft parameter (at Veeam’s request).

Later, when I replaced my SAN, both SANs were connected to the cluster at the same time. So I had Volume1, Volume2 and Volume3 from the old SAN device and Volume4, Volume5 and Volume6 from the new one. It could not be otherwise, since I needed to do a storage migration. Let’s say the value of ConfigStoreRootPath points to a folder located on Volume1 (or some other volume on the old SAN).

When I completed the migration, the last step was to disconnect the old SAN, and this step broke the ConfigStoreRootPath configuration. Don’t panic; it is a value configurable through PowerShell. Normally you could change it, but not in this case. You will receive an error, as Microsoft did not anticipate that anyone would do this. It is not possible to change or delete the value.

I opened a case with Microsoft, explained what I had done and asked how to solve the problem. The answer was: “Well, this is a Veeam parameter. It is actually configurable through PowerShell and we support this configuration, but we do not support changing this value. Call Veeam.” Of course, I opened another case with Veeam and got this answer: “Well, it is true that we use this setting, but it is a Microsoft setting and they should resolve this issue. It is not our problem.”

At this point I was frustrated, but I found a “solution” on the Microsoft forums. A Microsoft engineer told me that it should work, but the catch is that you have to shut down the entire cluster and change the configuration manually in Regedit. It didn’t work for me!

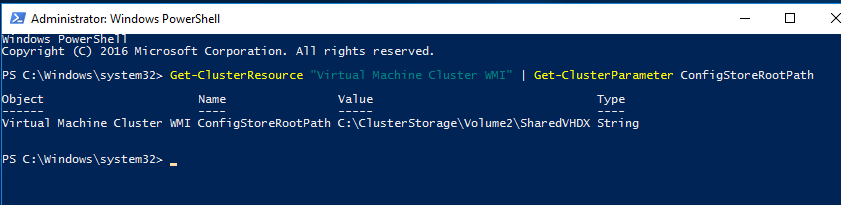

My solution: if you are not sure how it was originally configured, you can check it with this PowerShell cmdlet: `Get-ClusterResource "Virtual Machine Cluster WMI" | Get-ClusterParameter ConfigStoreRootPath`

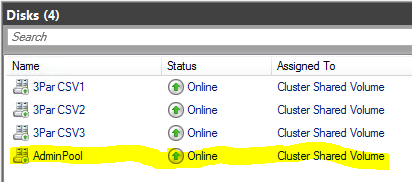
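Beyond reading the value, it can help to test whether the configured path still exists on the node. The following is only a minimal sketch, assuming the FailoverClusters PowerShell module is available and that the returned parameter object exposes the setting in its `Value` property; the variable names are illustrative.

```powershell
# Read the configured path from the cluster resource (same cmdlet as above).
$param = Get-ClusterResource "Virtual Machine Cluster WMI" |
    Get-ClusterParameter ConfigStoreRootPath

# $configPath is an illustrative variable name, not part of any API.
$configPath = $param.Value

if ([string]::IsNullOrEmpty($configPath)) {
    Write-Output "ConfigStoreRootPath is not set."
} elseif (Test-Path -Path $configPath) {
    Write-Output "ConfigStoreRootPath exists: $configPath"
} else {
    Write-Output "ConfigStoreRootPath is set to '$configPath', but that path does not exist."
}
```

If the last message appears, the parameter points to a location that no longer exists, which matches the situation described here.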

I don’t know whether to call it a solution; workaround may be the better word. It is very simple to do. I created a new LUN on the storage, just 1 GB since it does not need much space, and presented it to the cluster. I created a normal CSV volume, as you can see in the picture, but for now don’t use it or put any files on it.

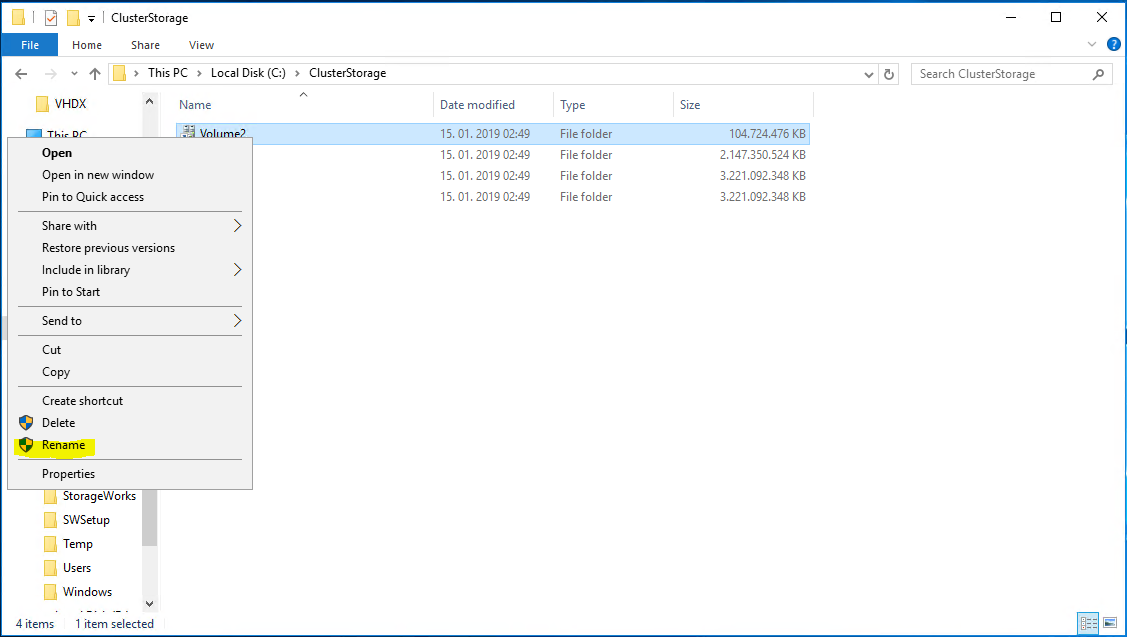

When you have your new volume, open File Explorer on the node that owns this CSV and browse to C:\ClusterStorage. There you can see all the volumes (in my case Volume4, Volume5, Volume6 and Volume7). Now just rename Volume7 to Volume1 (the same name the original location had).

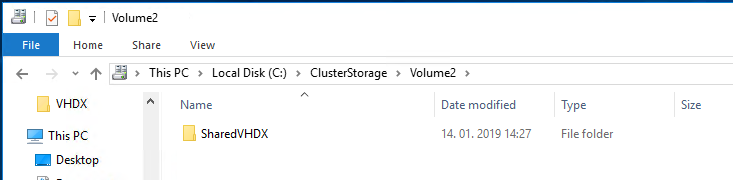

The last simple step is to create a folder with the same name as the one you had on the original volume.
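The same two steps could also be done from PowerShell rather than File Explorer. This is a hedged sketch, not the procedure used above: it assumes it runs on the node that owns the new CSV, that the volume names match the example (Volume7 renamed to Volume1), and that `Rename-Item` on the CSV mount point behaves like the rename in File Explorer. The variable names are illustrative.

```powershell
# Assumed names taken from the example above; adjust to your environment.
$csvRoot = "C:\ClusterStorage"
$newName = "Volume7"   # mount point of the newly created CSV
$oldName = "Volume1"   # volume name that ConfigStoreRootPath still refers to

# Rename the new CSV mount point to the old volume name.
Rename-Item -Path (Join-Path $csvRoot $newName) -NewName $oldName

# Recreate the folder that ConfigStoreRootPath points to, if it is missing.
$configPath = (Get-ClusterResource "Virtual Machine Cluster WMI" |
    Get-ClusterParameter ConfigStoreRootPath).Value
if (-not (Test-Path -Path $configPath)) {
    New-Item -ItemType Directory -Path $configPath | Out-Null
}
```

Reading the folder path from the parameter itself avoids retyping it and guarantees the recreated folder matches what the cluster expects.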

The work is done; now you have exactly the same path as specified in the parameter. Live migrations work again. You don’t need to restart servers, the cluster service or anything else. You just need the path defined in ConfigStoreRootPath to exist. The system will create some files and folders in this path, but don’t worry about that; you don’t need to copy the old data.

Finally, if you have enough space on the CSVs and some free time, you can move all data from one volume to another, rename the emptied volume and move the data back. You can do this volume by volume for all of them. It will not change the functionality, but in the end it will look nicer: you will have Volume1, Volume2 and Volume3 again.

One comment found on “

Thank you for creating this! You might add the error that Veeam was giving for the Google algorithm to associate it with that. I was able to find your post because of the related KB article from Veeam, and didn’t even realize we had a live migration issue until I read this!

The error that we were getting from Veeam is “Cannot find Hyper-V virtual machine group information on host cluster.domain.local”