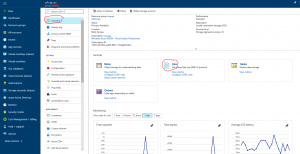

Azure File Sync (AFS) is a new Azure service, currently in public preview. In my view, it is a service with very strong fundamentals and a promising future.

What can we do with it, and what problems does it address? We produce more data every day, we keep building new on-premises datacenters, and we open new corporate locations. All of this leads to problems with disk space and with keeping data in sync around the world.

AFS is designed to solve these problems and to give us more control over our data and hardware usage. We can use AFS in several modes, or in combinations of them:

- We can sync a server or cluster to Azure and duplicate all files from local storage to Azure, simply because we want additional protection or an additional access point (an Azure file share).

- We can sync a server or cluster to reduce our hardware needs. Only the files we use frequently are stored locally; older files exist only in Azure, so we don't need local disk space for them. This is cloud tiering: we define rules for when files move to the cloud and disappear from local storage. If we need a file that exists only in Azure, we still see it on local storage (with a grayed icon), and it is transferred from Azure the moment we open it. From then on it is stored locally again and is once more subject to the AFS rules.

- We can sync multiple servers (or clusters) in different datacenters across the world, similar to DFS. Synchronization goes through Azure, and all files exist in Azure (not necessarily on premises), so in this case Azure becomes the new file store. Because different locations work with different files, the on-premises content can (and will) vary from server to server. We cannot expect every server to hold the same files locally, and there is no single place other than Azure storage where all your files can be found together.
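To make the tiering mode more concrete, here is a minimal sketch of a "free space target" rule: keep tiering the least recently accessed files until the volume reaches the desired percentage of free space. This is only an illustration under stated assumptions; `FileInfo`, `select_files_to_tier` and the `free_target_pct` parameter are hypothetical names, and the real AFS agent's selection logic is not reproduced here.

```python
from dataclasses import dataclass


@dataclass
class FileInfo:
    # Hypothetical record describing one locally stored file.
    path: str
    size_bytes: int
    last_access_day: int  # larger value = accessed more recently


def select_files_to_tier(files, volume_bytes, free_bytes, free_target_pct):
    """Return paths to tier, coldest first, until free space reaches the target.

    Assumes a tiered file frees its whole size locally (the small stub left
    behind is ignored for simplicity).
    """
    target_free = volume_bytes * free_target_pct / 100
    to_tier = []
    # Least recently accessed files are tiered first.
    for f in sorted(files, key=lambda f: f.last_access_day):
        if free_bytes >= target_free:
            break
        to_tier.append(f.path)
        free_bytes += f.size_bytes
    return to_tier
```

For example, on a 100-unit volume with 10 units free and a 30% target, the two coldest files are tiered and the recently used one stays local. Sorting by last access is the design choice that keeps frequently used files on premises.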

Using this technology will change your environment and the way you think about some operations that are well established today, so it is very important to know what will be affected and how. The most important thing that has to change is backup. Your complete set of files exists only in Azure, so the backup has to be done there. If you try to back up locally, you run into a problem: the backup reads every file, which transfers tiered files back from Azure, and those files then remain on premises as if they were frequently used.
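The backup problem comes from the fact that reading a tiered file's content brings it back from Azure. The sketch below shows how a local tool could at least detect such files from their metadata before reading them. It assumes that tiered files carry the Windows offline and/or recall-on-data-access attribute bits (the constant values are the Win32 `FILE_ATTRIBUTE_*` values, defined locally here); `looks_tiered` and `local_backup_candidates` are hypothetical helpers, not part of any AFS tooling, and this does not replace backing up in Azure.

```python
import os

# Win32 attribute bits, defined locally (assumption: tiered files carry one of them).
FILE_ATTRIBUTE_OFFLINE = 0x00001000
FILE_ATTRIBUTE_RECALL_ON_DATA_ACCESS = 0x00400000


def looks_tiered(attributes: int) -> bool:
    """True if the attribute bits suggest the file content is not stored locally."""
    return bool(attributes & (FILE_ATTRIBUTE_OFFLINE | FILE_ATTRIBUTE_RECALL_ON_DATA_ACCESS))


def local_backup_candidates(root):
    """Yield paths of files that look fully local, walking the tree recursively.

    os.scandir reads attributes from the directory listing, so no file
    content is opened. st_file_attributes exists only on Windows; elsewhere
    the attributes are treated as 0 (nothing looks tiered).
    """
    with os.scandir(root) as it:
        for entry in it:
            if entry.is_dir(follow_symlinks=False):
                yield from local_backup_candidates(entry.path)
            elif entry.is_file(follow_symlinks=False):
                st = entry.stat(follow_symlinks=False)
                attrs = getattr(st, "st_file_attributes", 0)
                if not looks_tiered(attrs):
                    yield entry.path
```

Skipping tiered files keeps a local job from recalling them, but it also means the local copy is incomplete, which is exactly why the complete backup belongs in Azure.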

There is a nice short video about AFS. You can watch it here.

How do you decide which files are good candidates for AFS?

It depends on your usage, your company infrastructure and, of course, the file types. First, you have to identify the files or shares. In some cases, you may replace DFS with AFS (your users work with different files in different locations, and no location needs to have all files stored locally). Or you may have a large number of old files (I am thinking of one of my clients, an advertising agency, which has many old projects that must be kept in an archive but are practically never used). These are cases where you can use AFS. You get a good, long retention policy in Azure, and you don't need to worry about backups or disk space. This is very important and has real monetary value, also for an administrator.
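A quick way to check whether a share holds many rarely used files is to sum the size of files whose last-access time is older than some threshold. A minimal sketch, assuming last-access timestamps are maintained on the volume (on NTFS, last-access updates may be disabled, in which case the numbers are unreliable); `cold_data_report` is a hypothetical helper and the threshold in days is your choice.

```python
import os
import time


def cold_data_report(root, days):
    """Return (cold_bytes, total_bytes) for files under root.

    A file counts as cold if its last-access time is older than `days`.
    """
    cutoff = time.time() - days * 86400
    total = cold = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            try:
                st = os.stat(os.path.join(dirpath, name))
            except OSError:
                continue  # skip files that vanish or cannot be read
            total += st.st_size
            if st.st_atime < cutoff:
                cold += st.st_size
    return cold, total
```

Usage: `cold, total = cold_data_report(r"D:\Projects", 365)` gives a rough idea of how much local space tiering could free on that share (the path is only an example).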

Is it difficult to set up AFS?

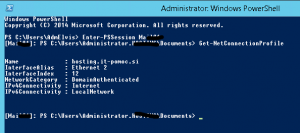

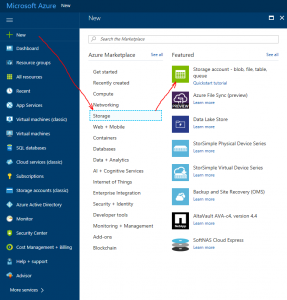

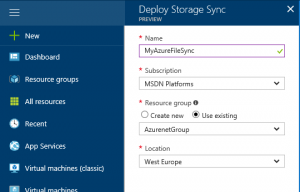

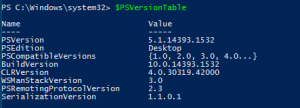

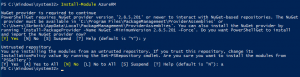

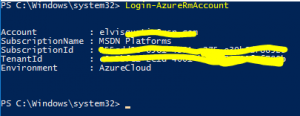

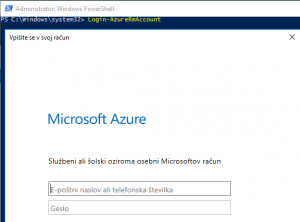

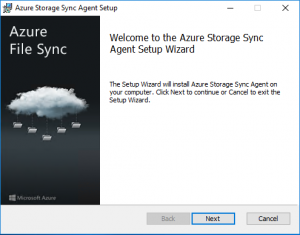

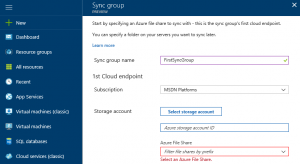

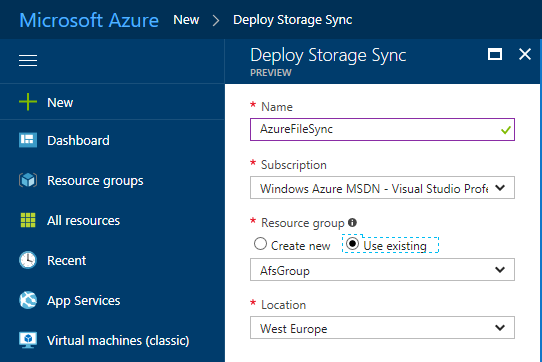

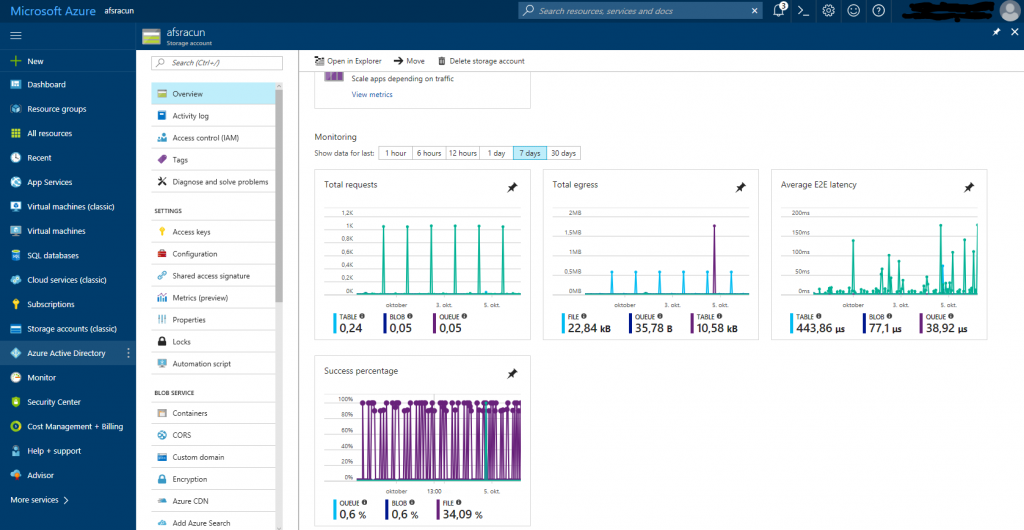

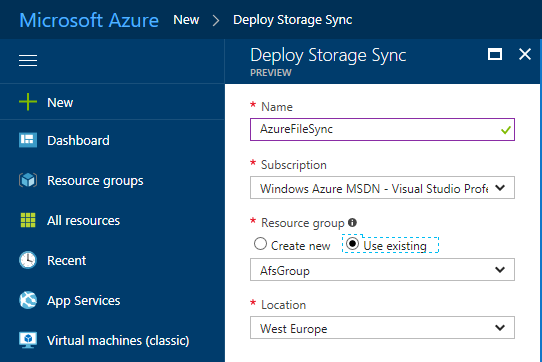

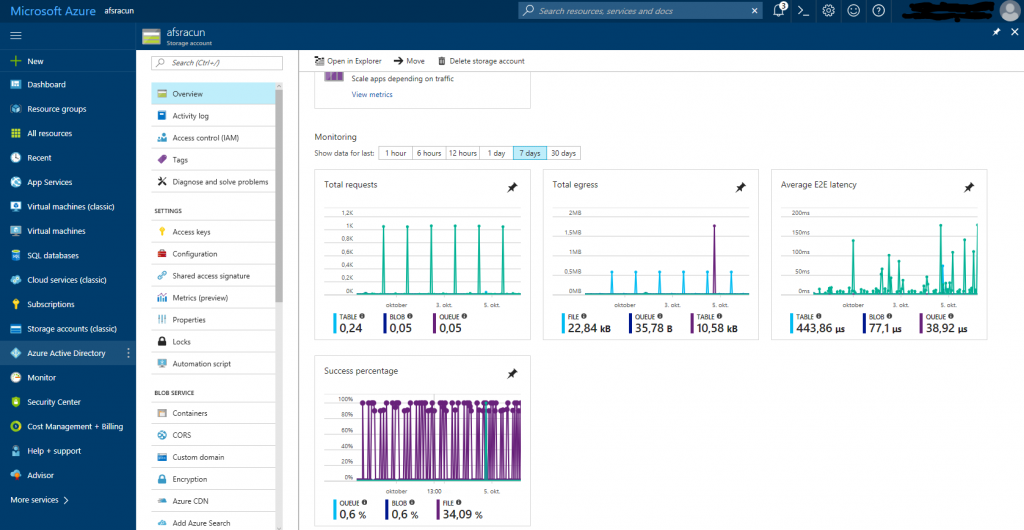

No. I would say it is simpler than building a DFS infrastructure. In short, you just need to install the AFS agent on the server, create a storage account and the AFS service (Storage Sync Service) in Azure, and connect both ends. For a few servers, you can do it in a few hours. Keep in mind, however, that synchronization takes time: bringing the complete infrastructure up to date and working will take longer, depending on the amount of data and your internet bandwidth. If you try it out, take your time, go slowly, wait for each step to complete, and you will be happy with the results.
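To get a feeling for how long the initial upload can take, divide the data volume by the usable bandwidth. A minimal sketch, assuming decimal units (1 GB = 10^9 bytes, 1 Mbit/s = 10^6 bit/s) and a hypothetical `efficiency` factor for the share of the link actually available to sync; it ignores sync overhead, so treat the result as a rough estimate only.

```python
def estimate_initial_sync_hours(data_gb, bandwidth_mbps, efficiency=0.7):
    """Rough upload-time estimate in hours.

    efficiency is an assumed fraction (0..1] of the link usable by sync.
    """
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    bits = data_gb * 1e9 * 8
    usable_bps = bandwidth_mbps * 1e6 * efficiency
    return bits / usable_bps / 3600
```

For example, 1 TB (1000 GB) over a fully used 100 Mbit/s link is 8e12 bits / 1e8 bit/s = 80,000 s, or about 22 hours; at 70% efficiency it is closer to 32 hours.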

In a few days I will write a post with step-by-step instructions on how to connect a server to AFS and get everything working.

In my view, this technology can be used one way in very small companies and another way in large companies. It is very flexible, with a very broad range of uses and possible solutions. I am sure this approach is the best way to get many implementations, success stories and satisfied customers. That is what we want, and I am sure it is already being done very well.